A/B Testing

A/B testing is a data-driven approach to testing and measuring different content and experiences to help you make impactful improvements to your site or app.

Prerequisites

To use A/B testing on a content entry, that content must be created and managed in Builder. Content created elsewhere cannot use Builder's A/B Testing feature.

Builder's A/B testing advantages

The advantages of doing A/B testing with Builder include:

- Seamless conversion tracking: Monitor conversions directly in the Builder UI, alongside your content.

- Unlimited comparisons: Run as many comparisons as you need, tailoring each test to your goals.

- SEO friendly: Keep your search engine optimization intact while tests run, because search engines see the default (control) variation.

- Uninterrupted user experience: No DOM/UI blocking and no unwanted flashes of content.

- Straightforward operation: No coding required; you don't have to be a developer to run effective A/B tests.

- Efficient compression: gzip compression keeps the added page weight small, even with multiple test groups.

Although all variations are initially sent with the HTML, gzip compression removes most of the redundancy between them, so the page typically grows by only 5-10%. This keeps your page fast. Builder supports A/B testing in all frameworks, including static-site frameworks such as Gatsby and Nuxt.
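To illustrate why sending every variation costs so little, the following Python sketch compresses one variation's HTML on its own, then compresses two near-identical variations sent together. The markup is synthetic (not real Builder output), and the overhead you observe depends on how much your variations actually differ; the 5-10% figure above is Builder's, not a result of this script.

```python
import gzip
import random


def make_variation(headline: str, seed: int = 0) -> str:
    """Build synthetic HTML: a headline plus roughly 10 KB of shared body text."""
    rng = random.Random(seed)  # same seed -> identical body text for every variation
    words = ["summer", "swimwear", "ocean", "sale", "beach", "sandals",
             "towel", "sunscreen", "collection", "new", "shop", "style"]
    paragraphs = []
    for _ in range(60):
        sentence = " ".join(rng.choice(words) for _ in range(25))
        paragraphs.append(f"<p>{sentence}.</p>")
    return f"<h1>{headline}</h1>\n" + "\n".join(paragraphs)


control = make_variation("New Swimwear is Here!")
variant = make_variation("Bathing Suits Galore")

one = len(gzip.compress(control.encode()))
both = len(gzip.compress((control + variant).encode()))

print(f"control alone:     {one} bytes gzipped")
print(f"control + variant: {both} bytes gzipped")
print(f"overhead: {100 * (both - one) / one:.1f}%")
```

DEFLATE (the algorithm behind gzip) can refer back to text it has already seen within a 32 KB window, so the second, mostly identical variation is encoded largely as short back-references instead of being stored again.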

Creating variations

Begin A/B testing by crafting distinct variations of a page or piece of content so Builder can collect metrics for each version.

Tip: Depending on whether a content entry has already been published, the Builder UI workflow might prompt you to make a duplicate content entry.

If the entry you want to test isn't published yet, you don't need to create a duplicate, and you might see just one entry in the content list when you're done.

Both workflows are correct, and each keeps your test insights accurate.

To create an A/B test variation:

- In the content entry, click the A/B Tests tab.

- Click the Add A/B Test Variation button.

- Rename your new variation (labeled Variation 0 by default) and fine-tune the test ratio. To test more than one variation against the control, repeat this process.

- Go back to the Edit tab to toggle between variations and make adjustments.

- When you're ready, click the Publish button to start the test. To schedule it for a later launch, see Targeting and Scheduling Content.
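The test ratios you set in the steps above split traffic across the variations, so together they should account for all visitors. The sketch below models that as plain Python data and checks it, assuming ratios are expressed as fractions that must add up to 1 (100%). The `Variation` class and `validate_ratios` function are hypothetical illustrations, not part of Builder's API; in Builder you set ratios with the sliders in the A/B Tests tab.

```python
import math
from dataclasses import dataclass


@dataclass
class Variation:
    """Hypothetical model of one test variation (not Builder's data format)."""
    name: str
    ratio: float  # share of traffic, from 0.0 to 1.0


def validate_ratios(variations: list[Variation]) -> None:
    """Raise ValueError unless every ratio is in [0, 1] and the ratios sum to 1."""
    for v in variations:
        if not 0.0 <= v.ratio <= 1.0:
            raise ValueError(f"{v.name}: ratio {v.ratio} is outside [0, 1]")
    total = sum(v.ratio for v in variations)
    if not math.isclose(total, 1.0, abs_tol=1e-9):
        raise ValueError(f"ratios sum to {total}, expected 1.0")


# A control plus two renamed variations, split 50/25/25.
experiment = [
    Variation("Default (control)", 0.50),
    Variation("Red CTA", 0.25),
    Variation("Minimal CTA", 0.25),
]
validate_ratios(experiment)  # passes without raising
```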

The video below walks through this process for a previously unpublished entry. If your entry is already published, the Builder UI guides you through the workflow.

Important points to remember

When A/B Testing, keep these things in mind:

- The default variation, known as the control, serves as the baseline exposed to search engines, users with JavaScript disabled, and those with tracking deactivated.

- Once a test is live and receiving traffic, refrain from introducing new variants or removing existing ones. This maintains the integrity of your test results and safeguards them from the influence of previous tests or outdated content versions.

- Visitors are assigned to variations at random according to the test ratio, and each assignment is tracked with a cookie. Users with tracking disabled aren't assigned to any test group, so they see the control.
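The allocation rule above can be sketched as a weighted random draw whose result is stored in a cookie, so a returning visitor keeps the same variation. In this Python sketch the cookie jar is simulated with a dictionary; the cookie name `ab_variation` and the `assign_variation` helper are hypothetical, and Builder's actual cookie names and internals may differ. As described above, visitors with tracking disabled get no assignment and see the control.

```python
import random

# Hypothetical test setup: variation name -> test ratio (ratios sum to 1.0).
RATIOS = {"control": 0.5, "variant-a": 0.3, "variant-b": 0.2}
COOKIE_NAME = "ab_variation"  # hypothetical cookie name, not Builder's


def assign_variation(cookies: dict[str, str], tracking_enabled: bool,
                     rng: random.Random) -> str:
    """Return the variation to render for one visitor, persisting it in `cookies`."""
    if not tracking_enabled:
        # No assignment and no cookie: the visitor sees the control.
        return "control"
    existing = cookies.get(COOKIE_NAME)
    if existing in RATIOS:
        # Returning visitor: reuse the stored assignment.
        return existing
    # New visitor: weighted random draw according to the test ratios.
    names = list(RATIOS)
    choice = rng.choices(names, weights=[RATIOS[n] for n in names], k=1)[0]
    cookies[COOKIE_NAME] = choice  # persist for later visits
    return choice


rng = random.Random(42)
visitor_cookies: dict[str, str] = {}
first_visit = assign_variation(visitor_cookies, True, rng)
second_visit = assign_variation(visitor_cookies, True, rng)
print(first_visit == second_visit)  # True: the cookie keeps the assignment sticky

# Over many new visitors, the observed split approaches the test ratios.
counts = {name: 0 for name in RATIOS}
for _ in range(10_000):
    counts[assign_variation({}, True, rng)] += 1
print({name: n / 10_000 for name, n in counts.items()})
```

Persisting the draw is what keeps results clean: if a visitor were re-randomized on every page load, they could see several variations and their conversion couldn't be attributed to one of them.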

For A/B testing with Symbols, visit Symbols.

Interpreting A/B test metrics

Use the data from your A/B test to determine the winning variation. Compare conversion statistics across variations, focusing on the metric that best matches your priorities: conversion rate, total conversions, or conversion value. Give the test enough time to accumulate visitors and data before drawing conclusions.
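Which variation wins depends on the metric you prioritize. This Python sketch compares per-variation totals under each of the three metrics named above, and declines to pick a winner until every variation has a minimum number of impressions. The sample numbers, the `MIN_IMPRESSIONS` threshold, and the function names are hypothetical; in Builder, the Insights tab presents these figures for you.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Stats:
    impressions: int
    conversions: int
    conversion_value: float  # e.g. revenue attributed to the variation

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.impressions if self.impressions else 0.0


MIN_IMPRESSIONS = 1_000  # hypothetical "enough data" threshold

METRICS = {
    "conversion_rate": lambda s: s.conversion_rate,
    "total_conversions": lambda s: s.conversions,
    "conversion_value": lambda s: s.conversion_value,
}


def pick_winner(results: dict[str, Stats], metric: str) -> Optional[str]:
    """Return the best variation for `metric`, or None if any variation lacks data."""
    if any(s.impressions < MIN_IMPRESSIONS for s in results.values()):
        return None  # keep the test running
    score = METRICS[metric]
    return max(results, key=lambda name: score(results[name]))


# Hypothetical results: B converts more often, but A's conversions are worth more.
results = {
    "A": Stats(impressions=5_000, conversions=150, conversion_value=9_000.0),
    "B": Stats(impressions=5_000, conversions=200, conversion_value=8_000.0),
}
for metric in METRICS:
    print(metric, "->", pick_winner(results, metric))
```

With these numbers, B wins on conversion rate and total conversions, while A wins on conversion value, which is why the choice of metric should follow your priorities.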

Tip: To see conversion metrics on a custom tech stack, you need to integrate conversion tracking. Shopify-hosted stores are integrated automatically.

To evaluate the performance of your A/B test:

- In the Visual Editor, go to the A/B Tests tab.

- Click View Results below the sliders. This opens the Insights tab, which shows the full test results. Early on, the results may include little visitor data; as traffic accumulates, they give a clearer picture of how the test is progressing.

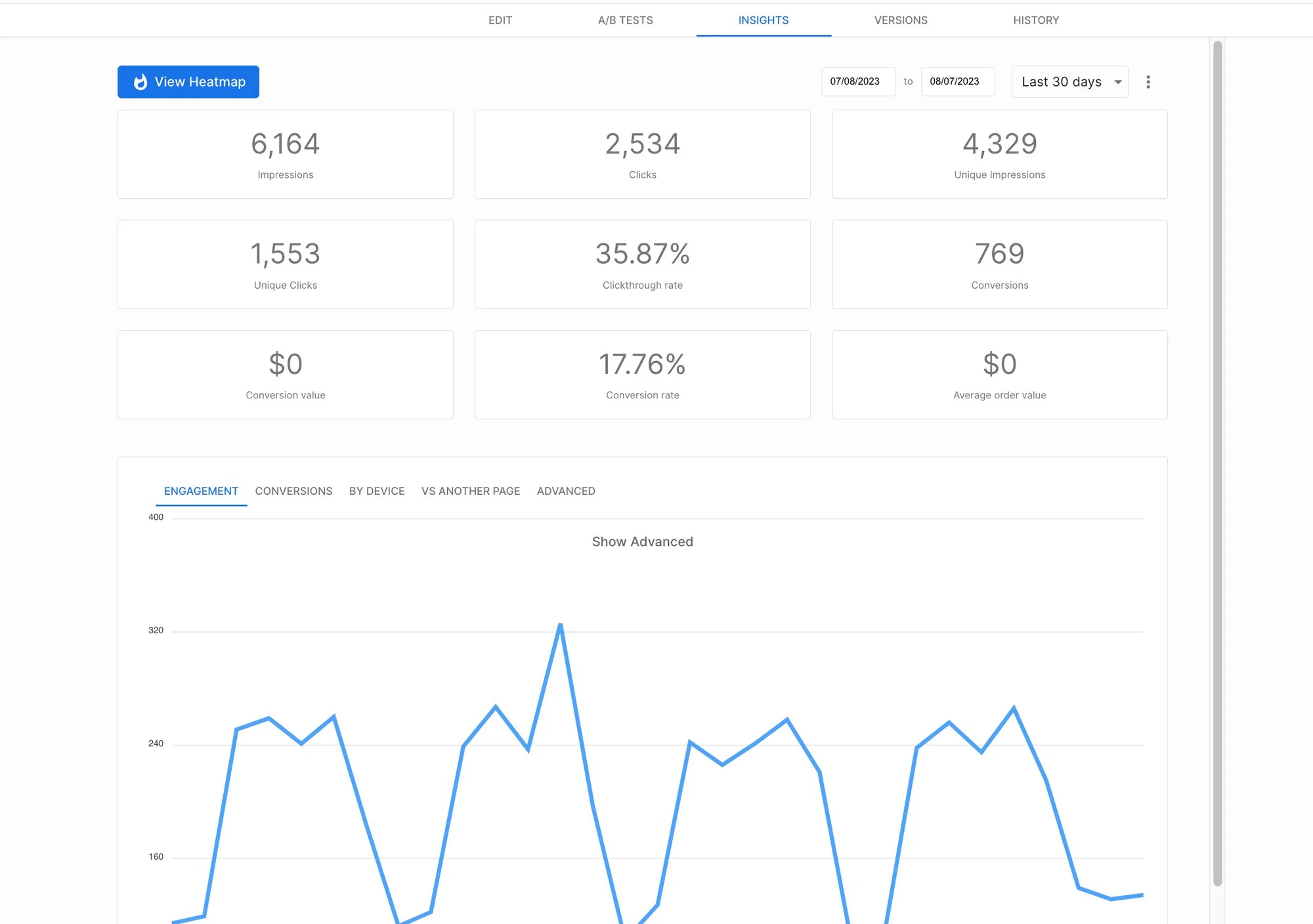

The following is a screenshot of the Insights tab that shows data such as Impressions, Clicks, and Clickthrough rate:

Builder calculates conversions based on impressions: a visitor only needs an impression of Builder content (no click required) for a later conversion to be tracked.
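Because conversions are counted against impressions, the rates in the Insights tab are ratios over impressions. The sketch below aggregates a hypothetical event log into Impressions, Clicks, Clickthrough rate, and an impression-based conversion rate. The event names `impression`, `click`, and `conversion` and the tuple layout are assumptions, not Builder's event schema. Following the rule above, a conversion counts for any visitor who saw the variation, even without a click, while events from visitors who never saw it are ignored.

```python
from collections import defaultdict

# Hypothetical event log: (visitor_id, variation, event_type), in time order.
events = [
    ("v1", "A", "impression"), ("v1", "A", "click"), ("v1", "A", "conversion"),
    ("v2", "A", "impression"),
    ("v3", "A", "impression"), ("v3", "A", "conversion"),  # converted without clicking
    ("v4", "B", "impression"), ("v4", "B", "click"),
    ("v5", "B", "impression"),
    ("v6", "B", "conversion"),  # no impression, so it isn't attributed
]


def summarize(events: list[tuple[str, str, str]]) -> dict[str, dict[str, float]]:
    """Aggregate events per variation into impression-based metrics."""
    counts = defaultdict(lambda: {"impression": 0, "click": 0, "conversion": 0})
    seen = set()  # (visitor, variation) pairs that received an impression
    for visitor, variation, kind in events:
        if kind == "impression":
            seen.add((visitor, variation))
        elif (visitor, variation) not in seen:
            continue  # no prior impression: skip the click or conversion
        counts[variation][kind] += 1

    summary = {}
    for variation, c in counts.items():
        imps = c["impression"]
        summary[variation] = {
            "impressions": imps,
            "clicks": c["click"],
            "ctr": c["click"] / imps if imps else 0.0,
            "conversion_rate": c["conversion"] / imps if imps else 0.0,
        }
    return summary


for variation, row in summarize(events).items():
    print(variation, row)
```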

Concluding an A/B Test

Once you've determined the winning variation, it's time to implement the improvements. You have two options, both managed from the A/B Tests tab.

Keeping all variations but delivering the winner

Tip: Use this option if you think you might want the test variations later.

This option retains all variations but delivers only the winning variation to users.

Set the ratios in the A/B Tests tab so that only the winning variation is shown to new visitors. Your audience immediately sees the best-performing version, and you keep the other variations for future use.
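Delivering only the winner amounts to giving it the entire test ratio and setting every other variation to 0% while keeping those variations in place. A minimal sketch using a hypothetical ratio map (the `deliver_winner` function is illustrative, not a Builder API):

```python
def deliver_winner(ratios: dict[str, float], winner: str) -> dict[str, float]:
    """Return new ratios that send all new visitors to `winner` but keep every variation."""
    if winner not in ratios:
        raise KeyError(f"unknown variation: {winner}")
    return {name: (1.0 if name == winner else 0.0) for name in ratios}


before = {"control": 0.5, "variant-a": 0.3, "variant-b": 0.2}
after = deliver_winner(before, "variant-a")
print(after)  # {'control': 0.0, 'variant-a': 1.0, 'variant-b': 0.0}
```

Because the losing variations stay in the map with a ratio of 0.0, they remain available if you want to test them again later.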

Ending an A/B Test while removing variations

- This option ends the test permanently.

- If you choose a winner without duplicating the entry first, the other variations can't be carried over to another entry. If you duplicate the entry first and then return to choose the winner, the variations are copied to the duplicate.

The following table outlines what happens to the variations depending on whether you choose the winner before or after duplicating:

| When you choose the winner | What happens to the variations |
|---|---|
| Before duplicating the entry | The test ends, and the variations are not copied to the duplicate entry. To run more A/B tests, you must create new variations. |
| After duplicating the entry | The duplicate is created before the test ends, so the variations are copied to the duplicate entry. |

To end an A/B test completely:

- Select the Choose Winning Variation option within the A/B Tests tab.

- In the dialog that opens, review the details so you can make an informed choice.

- Click the End Test button.

After ending the test, Builder designates the winner with a checkmark icon. The following video demonstrates choosing the winner.

Statistical significance

As your test accumulates data, Builder computes statistical significance. A checkmark next to a variation's name in the table indicates that its effect on conversions is statistically significant: a gray checkmark means significance at the 90% confidence level (p < 0.10), and a green checkmark means significance at the 95% confidence level (p < 0.05).

Note that to use this feature, you must integrate conversion tracking.
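The specific statistical test Builder uses isn't stated here, so treat the following as an illustration of one standard approach: a two-sided two-proportion z-test comparing a variation's conversion rate with the control's. The sketch maps the resulting p-value onto the two checkmark levels described above (p < 0.10 for gray, p < 0.05 for green). The function names and sample counts are hypothetical.

```python
import math


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for H0: both variations share the same conversion rate."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # conversion rate if H0 is true
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no spread in the data, so no evidence of a difference
    z = (p_b - p_a) / se
    # For a standard normal Z, P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


def checkmark(p_value: float) -> str:
    """Map a p-value to the confidence levels described above."""
    if p_value < 0.05:
        return "green (95% confidence)"
    if p_value < 0.10:
        return "gray (90% confidence)"
    return "none"


# Hypothetical counts: control converts 300 of 10,000 visitors, variant 360 of 10,000.
p = two_proportion_p_value(300, 10_000, 360, 10_000)
print(f"p = {p:.4f} -> {checkmark(p)}")
```

Here the variant's 3.6% rate against the control's 3.0% gives z of about 2.4 and a p-value of roughly 0.018, which clears the 95% level. With a tenth of the traffic, the same rates would not be significant, which is why the test needs time to collect data.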

Examples of A/B tests

The following are some examples of features you can A/B test:

| General area | Specific area | Concept | Example |
|---|---|---|---|
| Content | Headers | Vary headers to find out what visitors respond to most | "New Swimwear is Here!" or "Bathing Suits Galore" |
| Content | Copy and designs | Try different copy styles according to your ideal customer persona | "We're digging summer!" or "Luxuriate in Paradise" |
| Design | Colors, design features, fonts | Experiment with button size, shape, and style to find out what encourages clicks | A red button with all-caps copy, or a branded color with a minimal lowercase font |
| Design | CTA position | Try different button locations in a content entry | Bottom-right or bottom-left placement |
| Design | Hero image | Try different hero images to find out which gets more clicks | A hero with a person leaping into a blue sea, or a hero of just the sea without the person |
| Functionality | Form | Experiment with different form formats | A form with multiple-choice questions, or one with free-form text boxes for written answers |
| Functionality | Responsiveness | Hide or show a feature based on device type to find out whether users can use it as effectively | On mobile devices, placing search in the hamburger menu or at the top of the page |